My Projects

Ongoing

Vision-Based Autonomous Agricultural Robot

Description

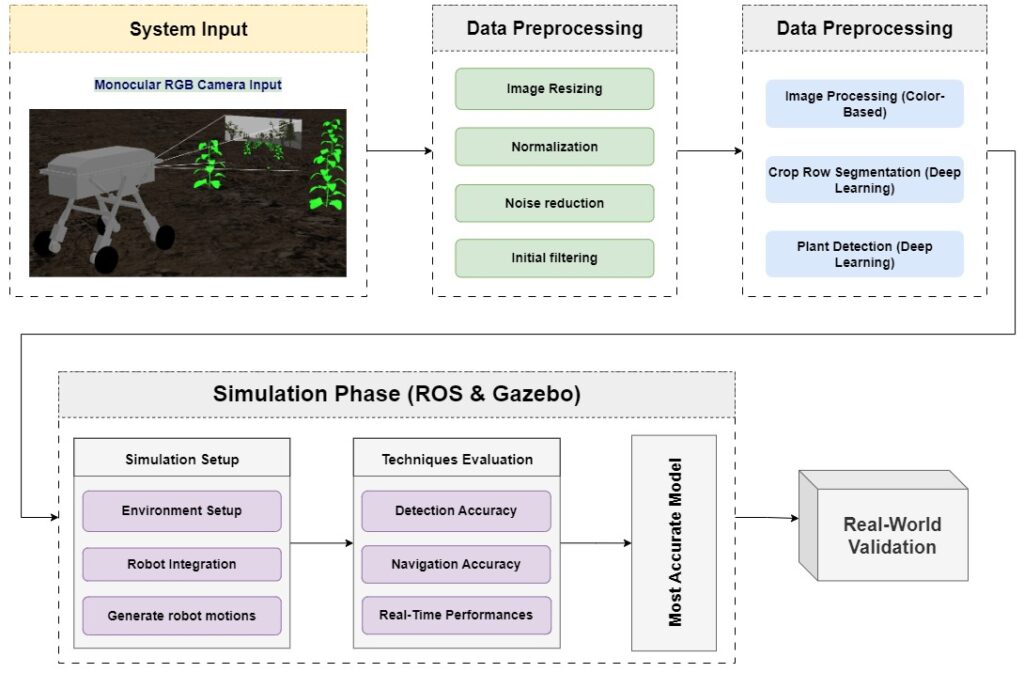

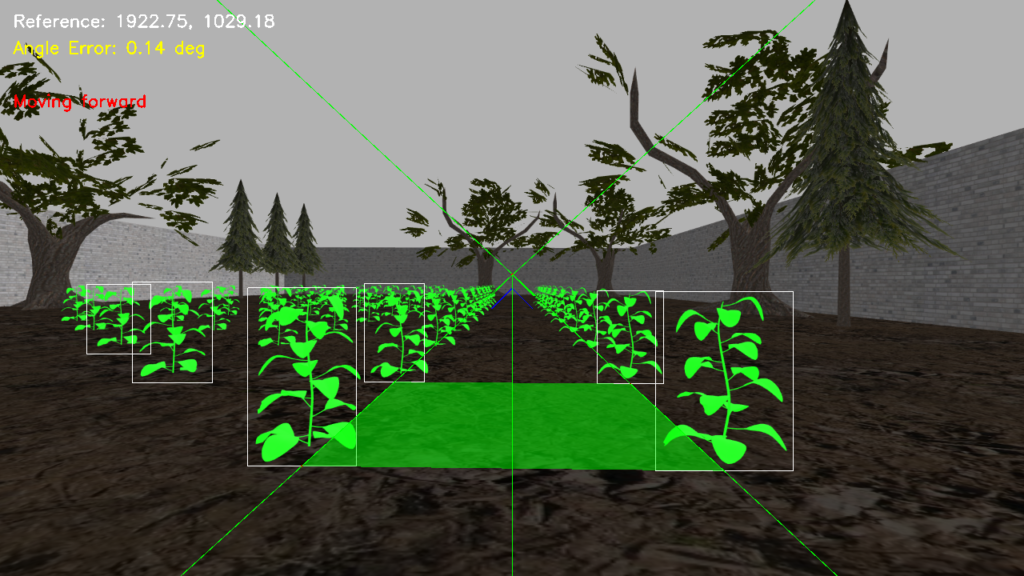

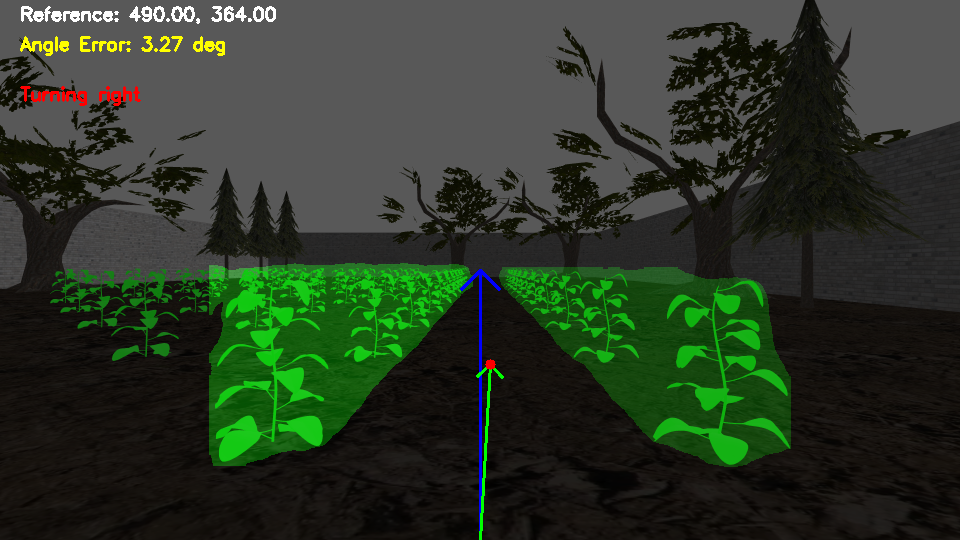

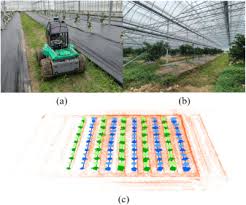

This project focuses on developing a cost-effective and efficient vision-based autonomous navigation system for agricultural robots. A four-wheeled robot, designed by the Robotics Team at the Electrical and Electronic Engineering Department of Ondokuz Mayıs University, is used as the test platform. The project integrates both classical image processing techniques and deep learning methods to evaluate navigation accuracy, processing speed, and robustness under varying environmental conditions.
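The page does not say which classical technique the project uses, so the following is only an illustration of the idea. A common classical starting point for crop row detection is to threshold the Excess Green index (2G - R - B) and look at where the vegetation pixels fall across the image. The threshold, the function names, and the toy frame are assumptions.

```python
def excess_green(pixel):
    """Excess Green index (2G - R - B) for an (r, g, b) pixel with 0-255 channels."""
    r, g, b = pixel
    return 2 * g - r - b


def row_offset(image, threshold=40):
    """Horizontal offset of vegetation from the image centre, in [-1, 1].

    Negative means the vegetation lies left of centre, positive means right.
    Returns None when no pixel passes the (assumed) threshold.
    The image is a list of rows, each a list of (r, g, b) tuples.
    """
    width = len(image[0])
    column_counts = [0] * width
    for row in image:
        for x, pixel in enumerate(row):
            if excess_green(pixel) > threshold:
                column_counts[x] += 1
    total = sum(column_counts)
    if total == 0:
        return None
    centroid = sum(x * count for x, count in enumerate(column_counts)) / total
    centre = (width - 1) / 2.0
    return (centroid - centre) / centre


# Toy 4x5 frame: brown soil everywhere, one green crop row in column 3.
soil = (120, 100, 80)
plant = (40, 160, 40)
frame = [[soil, soil, soil, plant, soil] for _ in range(4)]
offset = row_offset(frame)  # positive: the row is right of centre
```

An offset like this could serve as the error signal for a steering controller. A deep learning detector would replace the thresholding step but could feed the same downstream logic.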

Simulations are conducted using Gazebo and ROS, with plans to transition to real-world field tests. The study aims to provide a scalable, low-cost, and energy-efficient solution for small-scale farmers, enhancing precision agriculture through advanced perception and control systems.

🗝 Keywords: Precision Agriculture, Crop Row Detection, Autonomous Navigation, Deep Learning, Image Processing, CNN, ROS, Gazebo, Agricultural Robotics.

Completed - May 2024

ROS-Based Autonomous AgriBot

Description

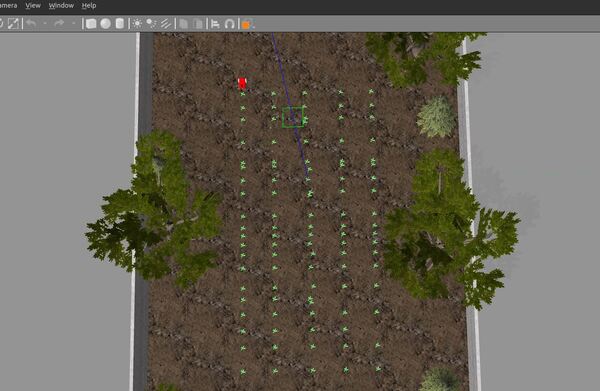

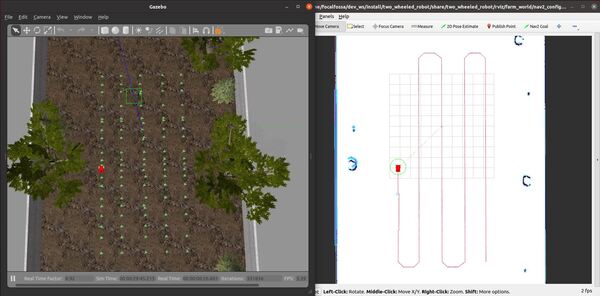

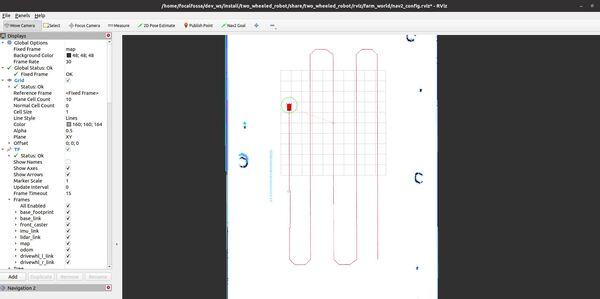

This project is based on the tutorial "How to Send a Goal Path to the ROS 2 Navigation Stack – Nav2" by Addison Sears-Collins. The objective was to develop an autonomous wheeled robot capable of navigating within an agricultural field using LiDAR and the ROS 2 Navigation Stack (Nav2).

Using Python, a goal path was sent to the mobile robot in a simulated Gazebo environment. This prototype demonstrates autonomous agricultural navigation, paving the way for real-world implementations in precision farming.
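A goal path of this kind is essentially a list of poses, each with a position and a quaternion orientation. The sketch below is not the tutorial's code. It converts hypothetical (x, y, yaw) waypoints into plain dictionaries laid out like `geometry_msgs/PoseStamped` fields. The waypoint values, the `map` frame name, and the helper names are assumptions, and a real node would fill actual ROS 2 messages and pass them to Nav2.

```python
import math


def yaw_to_quaternion(yaw):
    """Convert a planar heading in radians to a quaternion (x, y, z, w)."""
    half = yaw / 2.0
    return (0.0, 0.0, math.sin(half), math.cos(half))


def build_goal_path(waypoints, frame_id="map"):
    """Turn (x, y, yaw) tuples into pose dictionaries.

    The layout mirrors geometry_msgs/PoseStamped, but these are plain
    dicts rather than ROS messages, so the sketch runs without ROS.
    """
    path = []
    for x, y, yaw in waypoints:
        qx, qy, qz, qw = yaw_to_quaternion(yaw)
        path.append({
            "header": {"frame_id": frame_id},
            "pose": {
                "position": {"x": float(x), "y": float(y), "z": 0.0},
                "orientation": {"x": qx, "y": qy, "z": qz, "w": qw},
            },
        })
    return path


# Hypothetical waypoints: drive up one crop row, turn, return along the next.
row_waypoints = [
    (0.0, 0.0, 0.0),
    (5.0, 0.0, math.pi / 2),
    (5.0, 1.0, math.pi),
    (0.0, 1.0, math.pi),
]
goal_path = build_goal_path(row_waypoints)
```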

🔗 Credit: Addison Sears-Collins’ Tutorial

🗝 Keywords: ROS 2, Nav2, Autonomous Navigation, LiDAR, Mobile Robotics, Gazebo, Python.

Ongoing

Visual SLAM and Semantic Mapping for Agricultural Robotics

Description

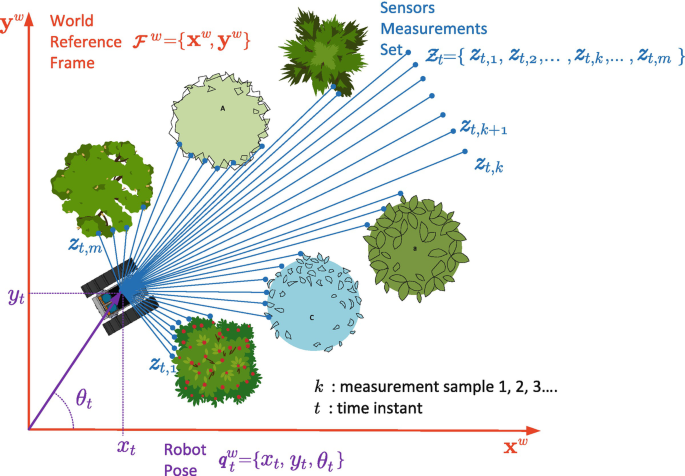

This research enhances autonomous agricultural robot navigation by integrating deep learning-based plant detection with Semantic SLAM. Unlike traditional RTK-GNSS and LiDAR-based localization, which are costly and less accessible for small-scale farmers, this approach fuses geometric and semantic information to create enriched environmental maps.

By leveraging object detection and semantic segmentation, the system differentiates between crops, obstacles, and pathways, improving autonomy and adaptability. Sensor fusion techniques, including an Inertial Measurement Unit (IMU), enhance localization accuracy, mitigating drift in challenging environments.

🛠 Research Methodology

- Semantic Mapping & SLAM: Implement Visual-SLAM with feature extraction, semantic segmentation, and object detection.

- Deep Learning-Based Plant Detection: Train CNNs and transformer models (YOLO, Mask R-CNN, Swin Transformer) using real-world datasets.

- Sensor Fusion for Localization: Integrate IMU and apply Visual-Inertial Odometry (VIO) for robust motion prediction.

- Experimental Validation: Evaluate performance through Gazebo/ROS simulations and real-world field trials.

🗝 Keywords: Visual SLAM, Semantic Mapping, Precision Agriculture, Deep Learning, Sensor Fusion, IMU, Object Detection.

Completed - Sep 2023

Autonomous Quadruped Robot for Survivor Identification

Description

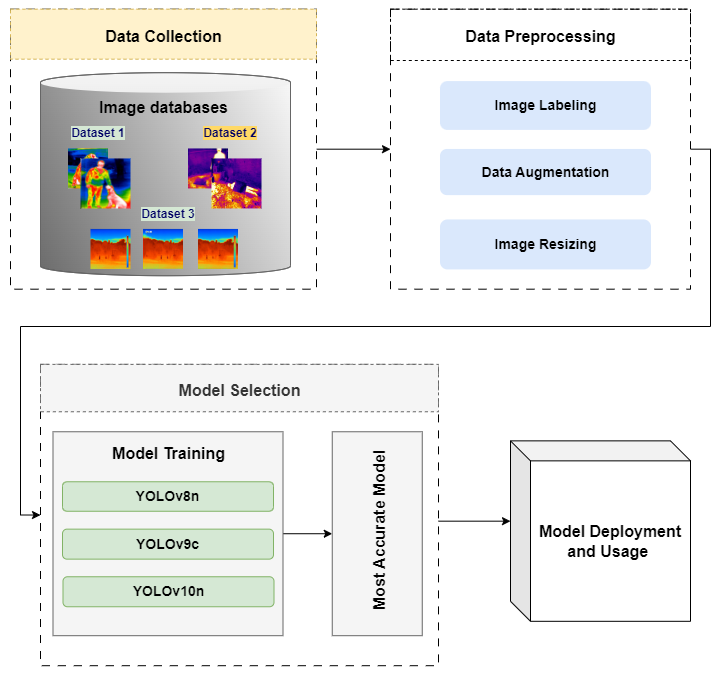

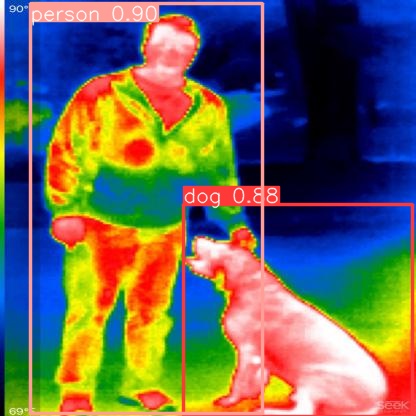

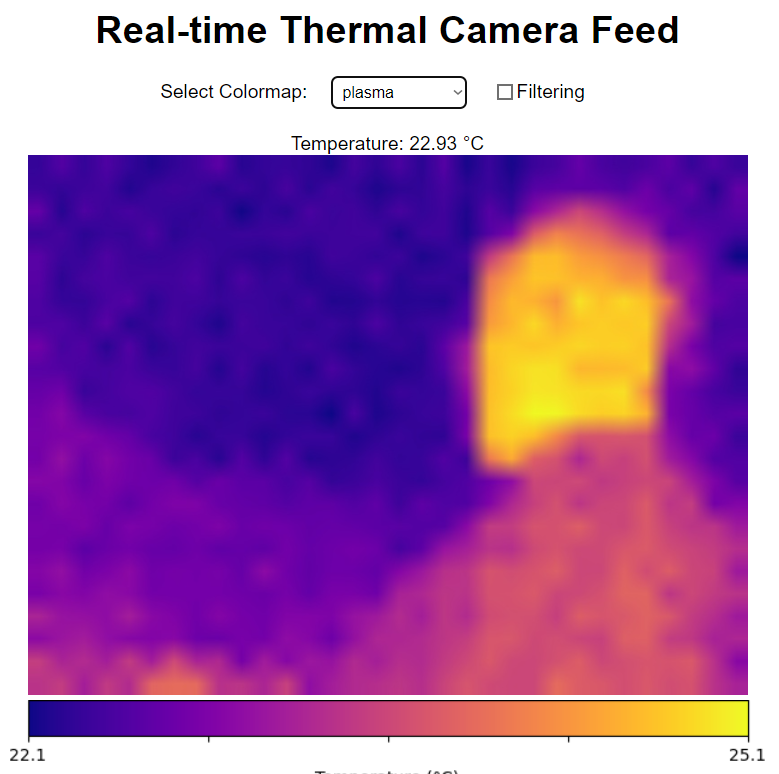

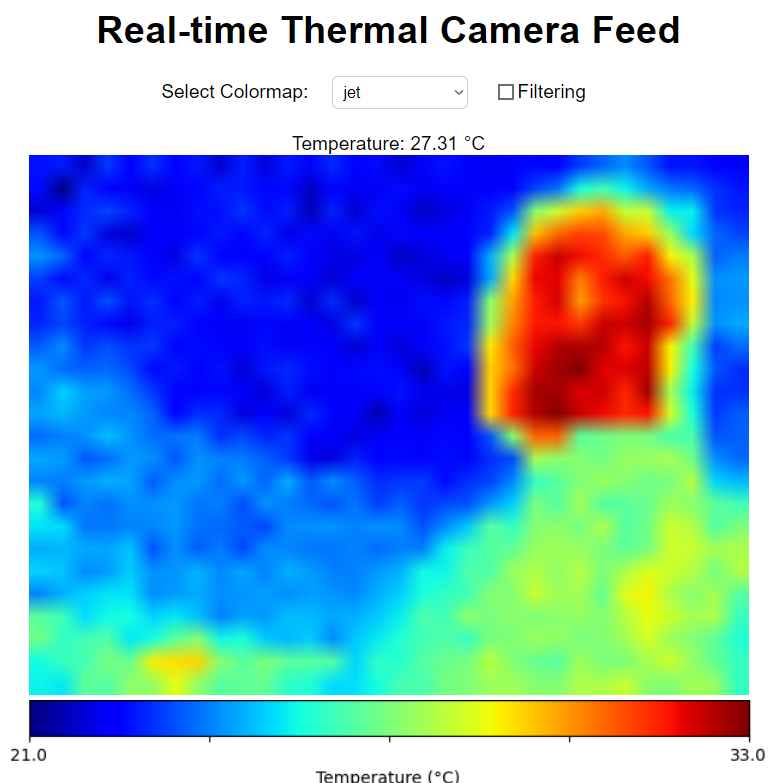

Developed as part of the university’s Robotics Team initiative, this project focuses on real-time victim identification using thermal imaging. A thermal camera (MLX90640) was integrated into a quadruped robot to detect humans and animals in low-light, smoke-filled, or foggy conditions.

The system, based on a Raspberry Pi, employs lightweight deep learning models for real-time thermal image processing. It provides an innovative solution for search-and-rescue operations, overcoming challenges faced by traditional RGB cameras and trained search dogs.

🗝 Keywords: Thermal Imaging, Search & Rescue Robotics, Quadruped Robot, Object Detection, Machine Vision, Real-Time Processing.

Completed - December 2024

Real-Time Crop and Weed Detection

Description

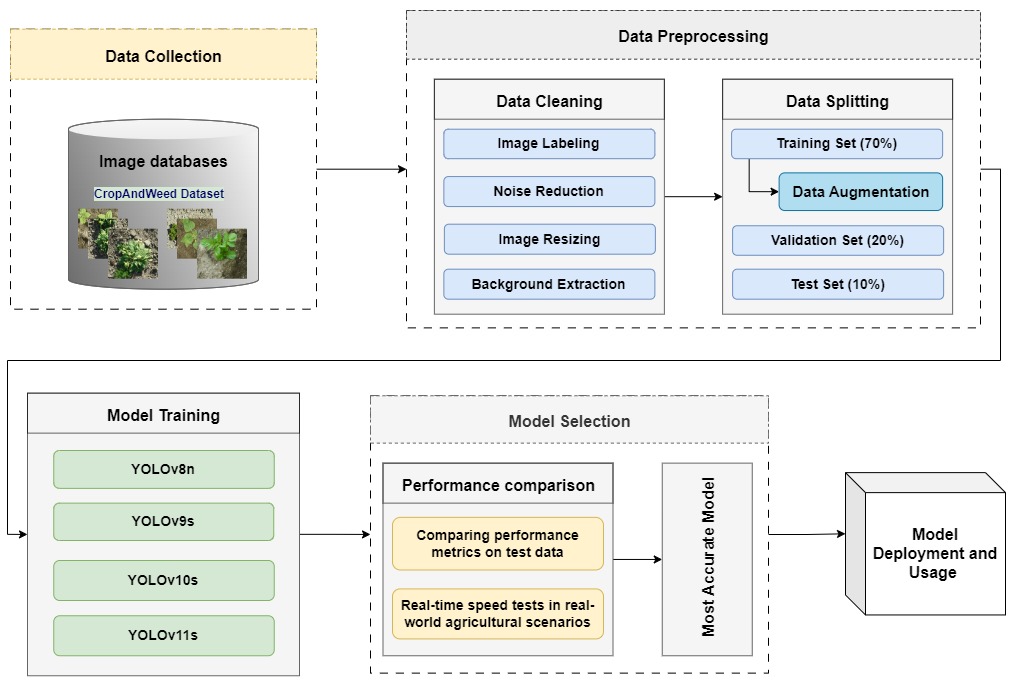

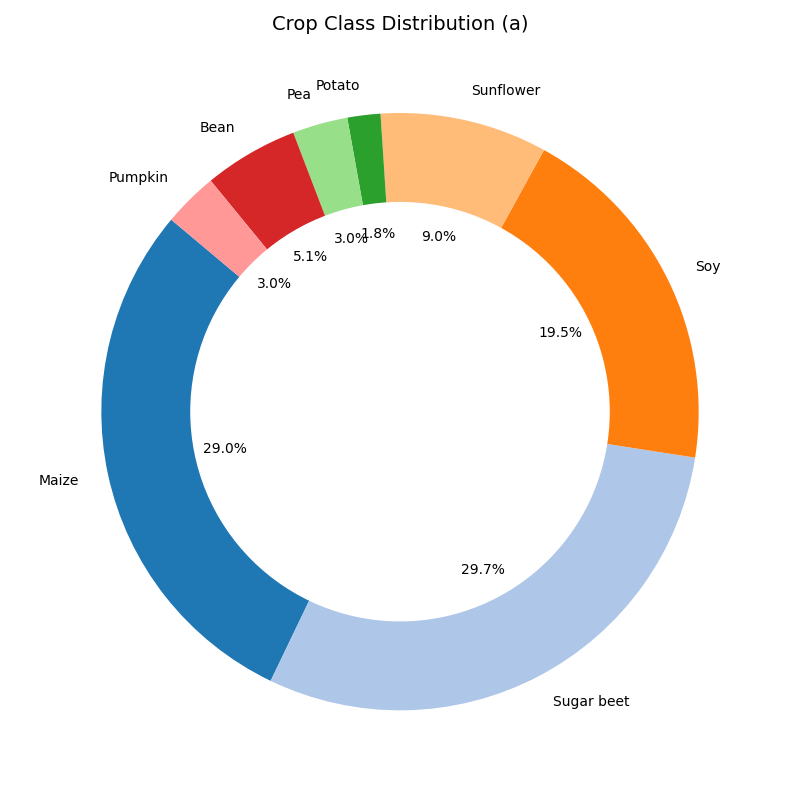

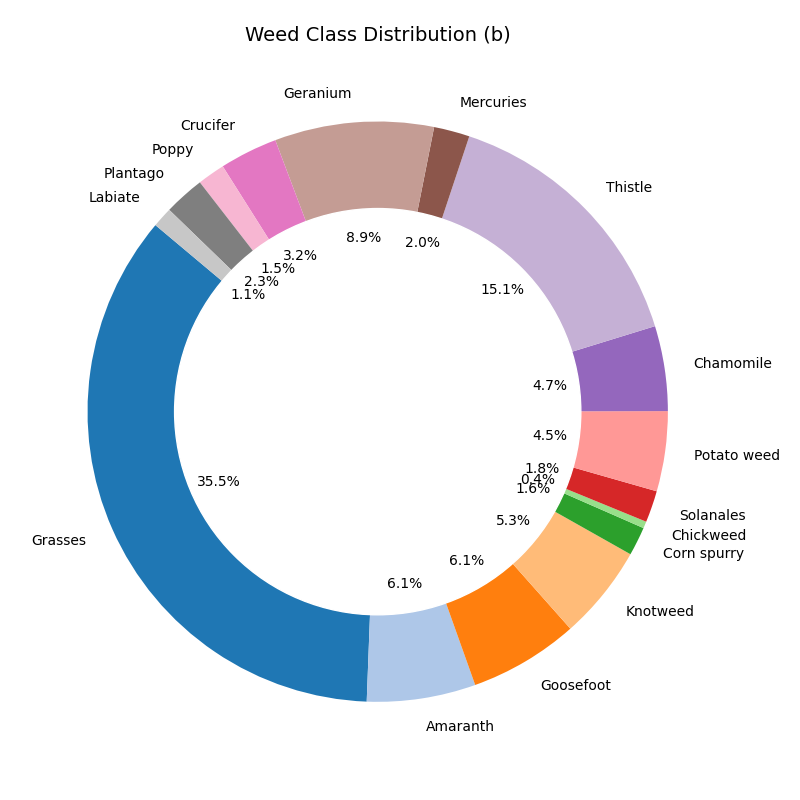

This project addresses critical challenges in modern agriculture, including labor shortages and climate change, by integrating AI-based precision farming solutions. A real-time crop and weed detection system was developed using advanced deep learning techniques, specifically YOLO (You Only Look Once) models. The system was trained on the CropAndWeed dataset (CAWD), incorporating diverse crop and weed instances under various environmental conditions.

To improve detection accuracy and robustness, extensive data augmentation techniques were applied, including flipping, mosaic augmentation, and color transformations. Additionally, a novel approach was introduced for generating synthetic agricultural videos by embedding segmented plant images into real-world recordings, allowing for a more comprehensive evaluation of real-time detection capabilities.
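Of the augmentations listed, flipping is the simplest to illustrate. When an image is mirrored, its bounding-box labels must be mirrored too. The sketch below assumes labels in the normalized YOLO text format (class, x_center, y_center, width, height) and a toy image stored as nested lists. It is an illustration, not the project's training pipeline.

```python
def hflip_image(image):
    """Mirror an image stored as a list of pixel rows."""
    return [list(reversed(row)) for row in image]


def hflip_yolo_labels(labels):
    """Mirror YOLO-format boxes (class_id, x_center, y_center, width, height).

    Coordinates are normalized to [0, 1], so only x_center changes.
    """
    return [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in labels]


# Toy 2x3 "image" and one hypothetical label (class 0).
image = [[1, 2, 3],
         [4, 5, 6]]
labels = [(0, 0.25, 0.5, 0.2, 0.4)]

flipped_image = hflip_image(image)
flipped_labels = hflip_yolo_labels(labels)
```

Training frameworks usually apply such transforms on the fly, so the stored dataset stays unchanged.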

Experimental results demonstrated that YOLOv11s achieved the highest accuracy (mAP50 of 81.4%), outperforming YOLOv8n, YOLOv9s, and YOLOv10s while maintaining inference speeds exceeding 19 FPS. This research highlights the transformative potential of AI-driven precision agriculture, optimizing weed management and enhancing crop yields.
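For context, the "50" in mAP50 refers to an intersection-over-union (IoU) threshold of 0.5: a prediction counts as correct only if it overlaps a ground-truth box by at least that much. The sketch below shows only this matching criterion, with made-up boxes. The full metric also averages precision over recall levels and classes.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction matches when its IoU with the ground truth meets the threshold."""
    return iou(pred_box, gt_box) >= threshold


# Made-up boxes in pixel coordinates.
ground_truth = (0, 0, 10, 10)
close_prediction = (0, 0, 10, 5)    # covers half of the ground truth
loose_prediction = (5, 5, 15, 15)   # overlaps only one corner

close_match = is_true_positive(close_prediction, ground_truth)
loose_match = is_true_positive(loose_prediction, ground_truth)
```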

🗝 Keywords: Crop and Weed Detection, Deep Learning, YOLO, Precision Agriculture, Real-Time AI, Smart Farming.

Completed - April 2024

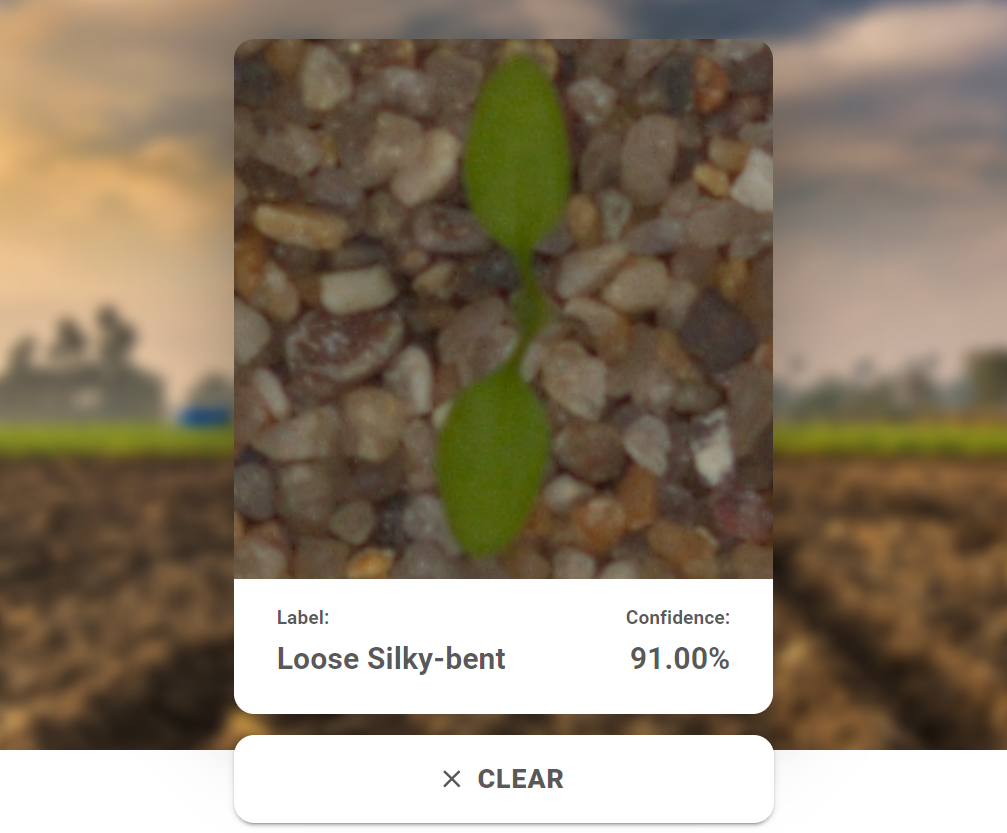

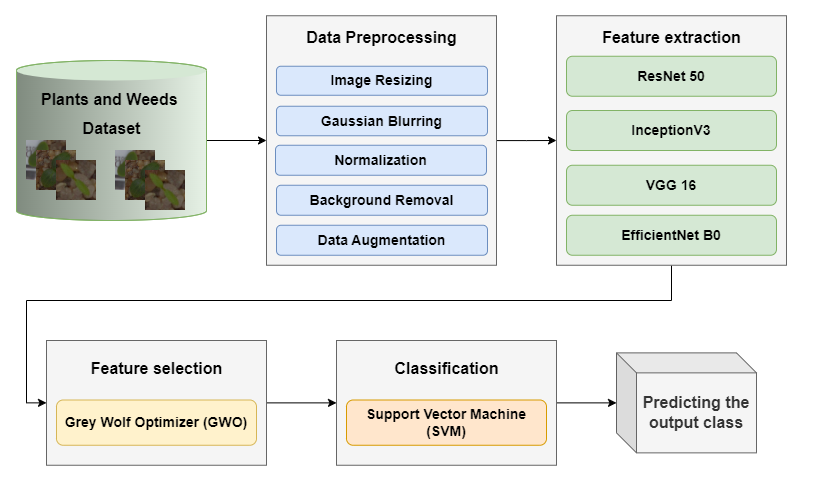

Web-Based Plant Seedling Classification

Description

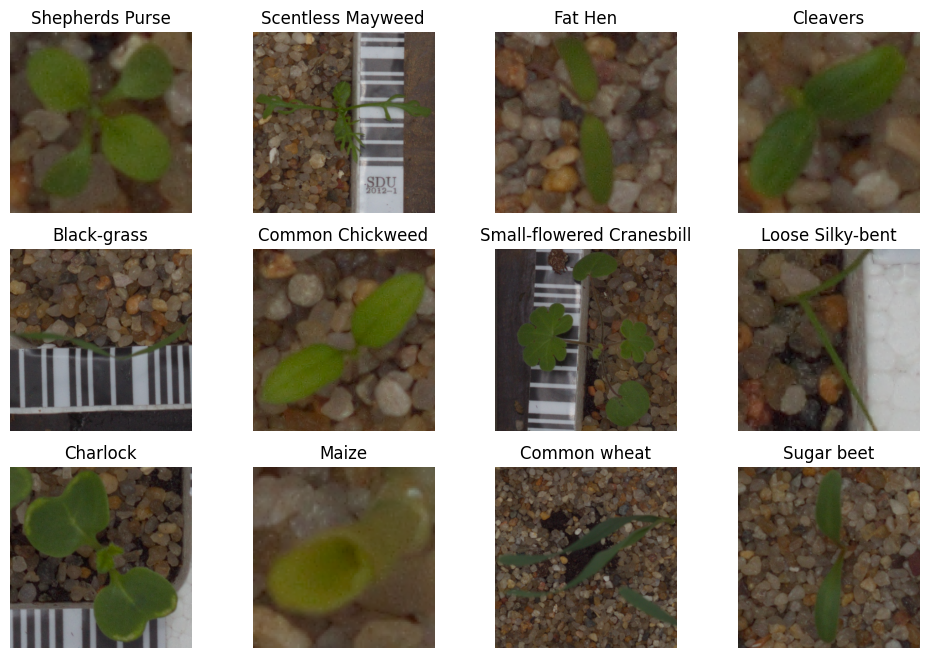

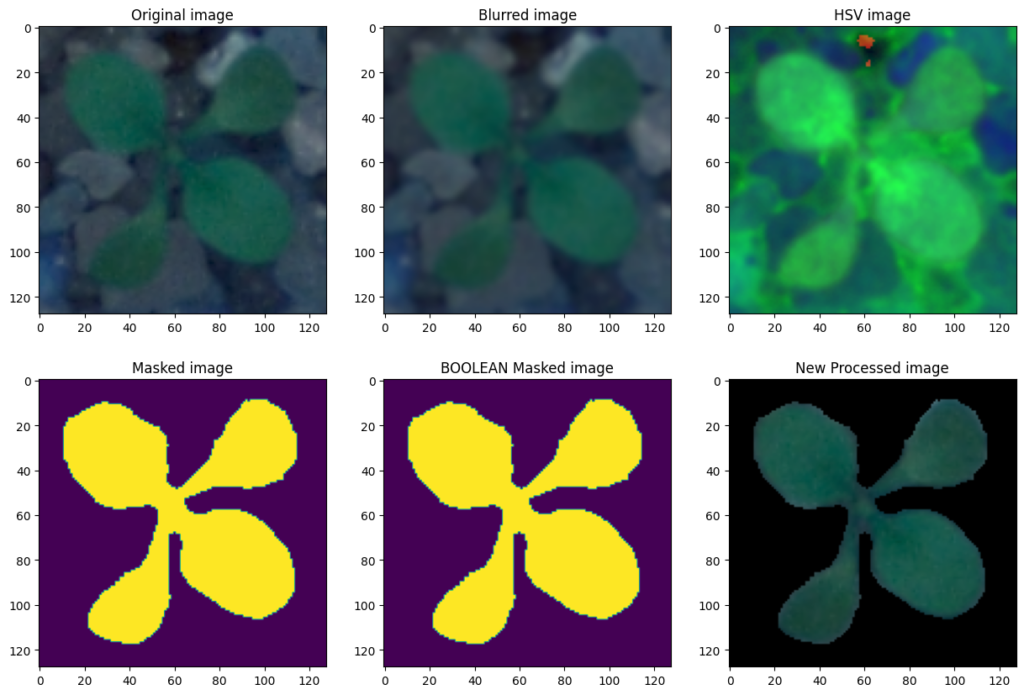

This project focuses on improving early-stage weed identification using AI-powered classification methods, addressing a major challenge in precision agriculture, particularly in emerging economies such as those in Africa. A web-based application was developed for real-time plant seedling classification, integrating Convolutional Neural Networks (CNNs) with Grey Wolf Optimization (GWO) and Support Vector Machine (SVM) to enhance classification accuracy.

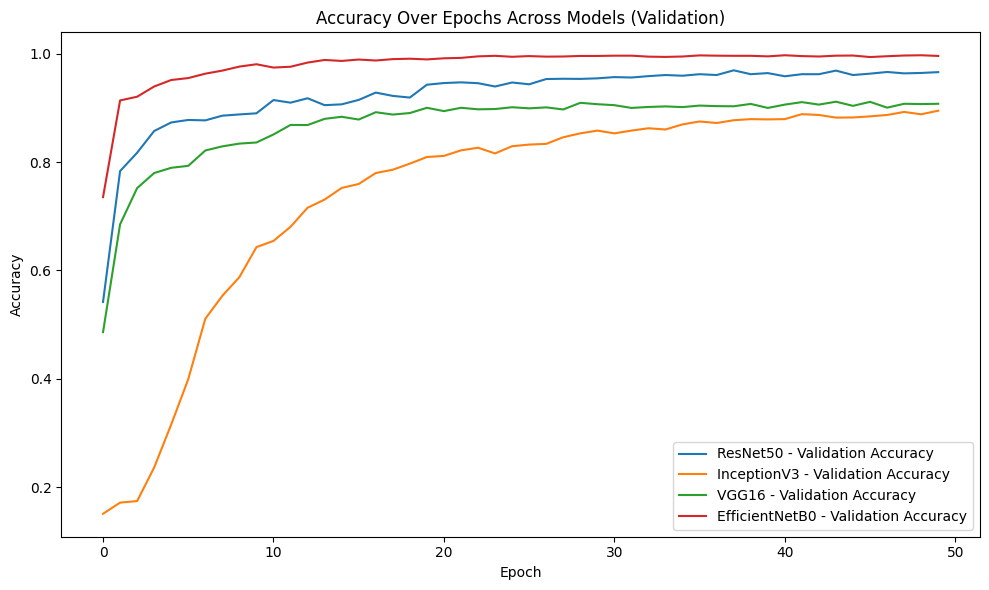

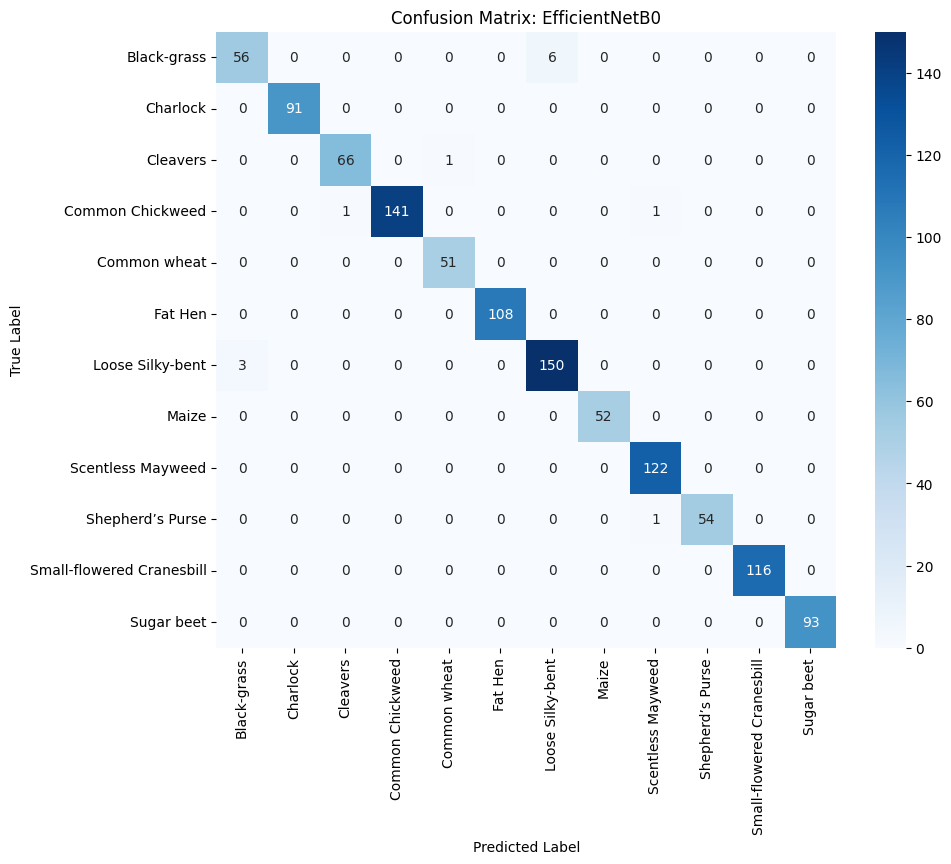

The model was trained on 5539 images spanning 12 plant species, including critical crops such as Common Wheat, Maize, and Sugar Beet, as well as nine weed species. Four CNN architectures were tested—ResNet-50, Inception-V3, VGG-16, and EfficientNet-B0—with EfficientNet-B0 emerging as the top performer, achieving 99.82% training accuracy and 98.83% test accuracy. The incorporation of GWO for feature optimization and SVM for classification refinement significantly improved the model’s efficiency.
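Grey Wolf Optimization is a population-based metaheuristic in which candidate solutions ("wolves") move toward the three best solutions found so far (alpha, beta, and delta). How it was applied to the CNN features is not detailed here, so the sketch below shows only the generic continuous form of the algorithm, minimizing a toy sphere function. The population size, iteration count, bounds, and objective are all assumptions.

```python
import random


def grey_wolf_optimize(fitness, dim, bounds, n_wolves=8, n_iters=30, seed=0):
    """Minimize `fitness` with a basic Grey Wolf Optimizer (needs n_wolves >= 3)."""
    rng = random.Random(seed)
    low, high = bounds
    wolves = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(n_wolves)]
    best_pos, best_fit = None, float("inf")

    for it in range(n_iters):
        ranked = sorted(wolves, key=fitness)
        leaders = [list(w) for w in ranked[:3]]  # copies of alpha, beta, delta
        lead_fit = fitness(leaders[0])
        if lead_fit < best_fit:
            best_pos, best_fit = list(leaders[0]), lead_fit

        a = 2.0 * (1.0 - it / n_iters)  # decreases linearly from 2 toward 0
        for wolf in wolves:
            for d in range(dim):
                guided = 0.0
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    distance = abs(C * leader[d] - wolf[d])
                    guided += leader[d] - A * distance
                # Average the three leader-guided moves and clamp to the bounds.
                wolf[d] = min(high, max(low, guided / 3.0))

    final = min(wolves, key=fitness)
    if fitness(final) < best_fit:
        best_pos, best_fit = list(final), fitness(final)
    return best_pos, best_fit


def sphere(position):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(value * value for value in position)


best_position, best_value = grey_wolf_optimize(sphere, dim=3, bounds=(-5.0, 5.0))
```

For feature optimization, the fitness function would instead score a candidate feature subset or weighting, for example by the validation error of a downstream classifier.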

The final model was deployed as a web-based application for real-time plant classification, making it a valuable tool for precision agriculture.

🔗 Live Web App: Plant Seedlings Classification

🗝 Keywords: Plant Seedlings, Classification, CNN, Grey Wolf Optimization, SVM, Deep Learning, Precision Agriculture, Weed Management.

Completed - January 2023

ROS-Based Autonomous Car

Description

This project focuses on developing a self-driving car prototype in ROS2, inspired by Tesla’s autonomous navigation features. The system enables the vehicle to follow lanes, classify traffic signboards using AI, and perform object tracking to adjust speed accordingly.

The project was implemented as part of a Udemy course led by Haider Najeeb (Computer Vision) and Muhammad Luqman (ROS Simulation & Control Systems). The ROS2-based self-driving system integrates deep learning, computer vision, and AI-based perception to create a simulated autonomous vehicle.

🔗 Instructors:

- Haider Najeeb (Computer Vision): LinkedIn Profile

- Muhammad Luqman (ROS Simulation and Control Systems): LinkedIn Profile

🗝 Keywords: ROS2, Autonomous Vehicles, Lane Following, AI, Object Tracking, Deep Learning, Computer Vision.

Completed - February 2022

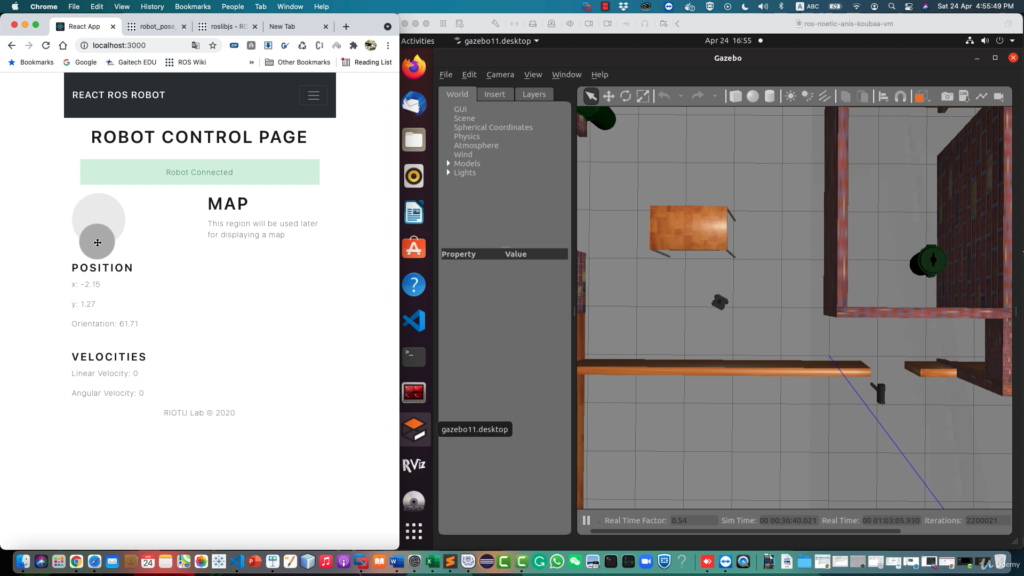

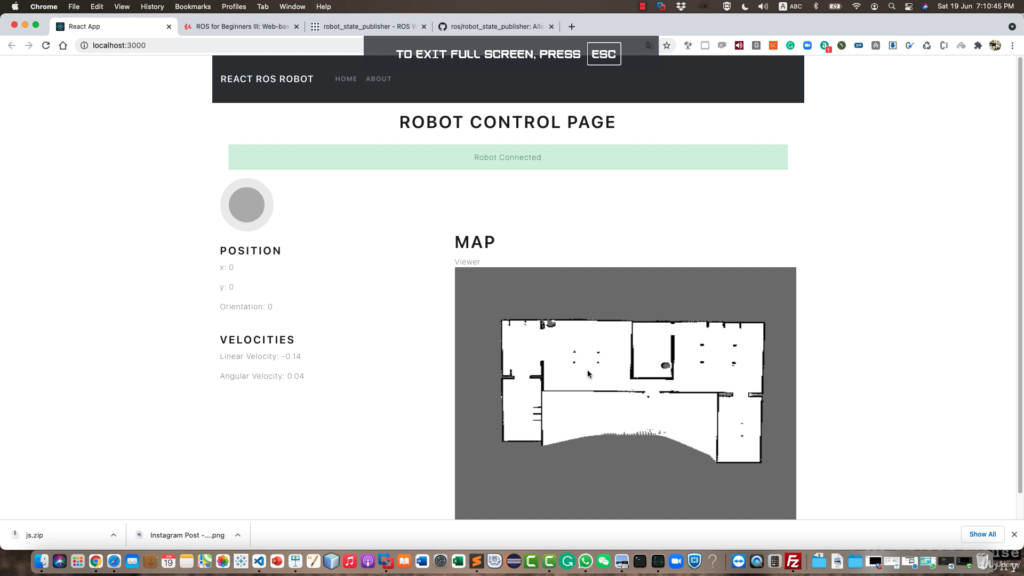

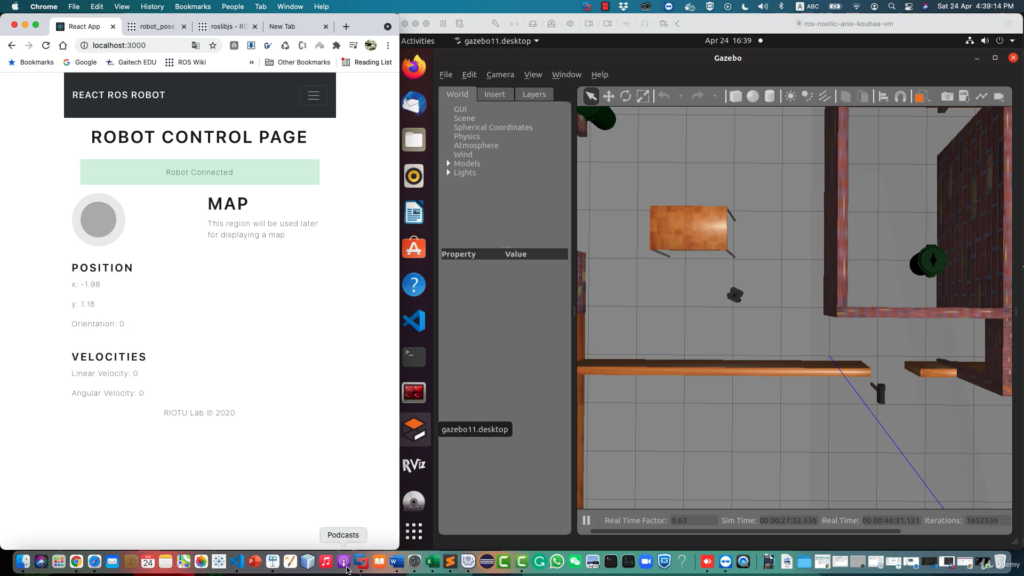

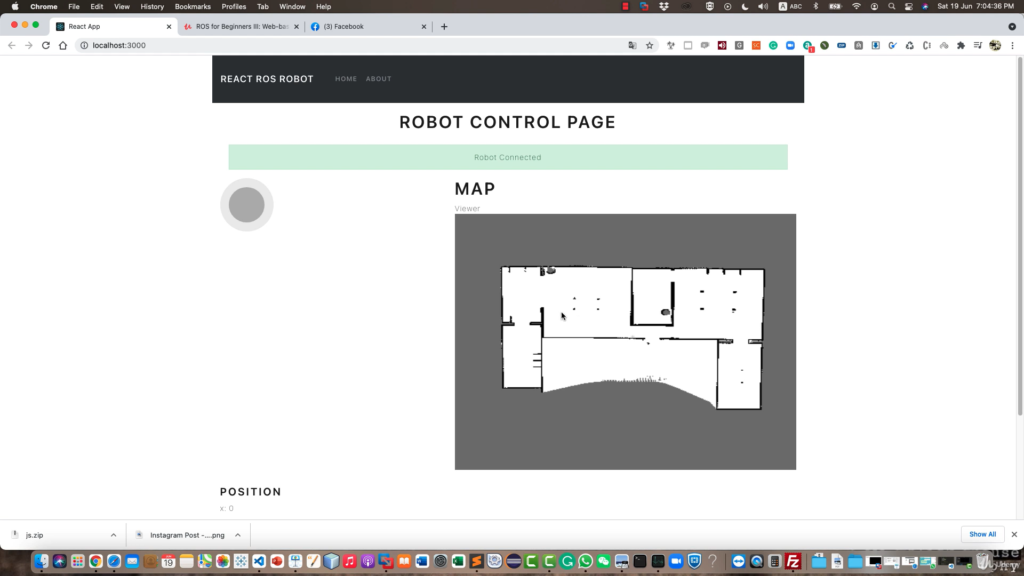

Web-Based Navigation for ROS-Enabled Robots

Description

This project focuses on developing a web-based interface for teleoperating and monitoring ROS-enabled robots. The web interface, built using ReactJS, allows users to control a robot remotely through a browser, providing real-time feedback on the robot’s position, orientation, and velocity.

The project was completed as part of a Udemy course by Anis Koubaa, titled "ROS for Beginners III: Web-Based Navigation with ROSBridge". The system features:

- A connection status monitor to indicate whether the robot is online.

- A web-based joystick for teleoperation.

- An emergency stop button for safety.

- A live navigation map for setting goal locations and monitoring robot movements.

This project demonstrates how ReactJS and ROSBridge can be leveraged to create user-friendly web interfaces for robotic systems.
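Under the hood, a browser client talks to ROSBridge by exchanging JSON messages over a WebSocket. The original interface is written in ReactJS. The sketch below uses Python only to show the shape of the `publish` operation that a joystick or emergency stop button might send. The `/cmd_vel` topic, the speed limits, and the helper names are assumptions.

```python
import json


def make_twist(linear_x, angular_z):
    """Build a dict shaped like a geometry_msgs/Twist message."""
    return {
        "linear": {"x": linear_x, "y": 0.0, "z": 0.0},
        "angular": {"x": 0.0, "y": 0.0, "z": angular_z},
    }


def twist_from_joystick(x_axis, y_axis, max_linear=0.5, max_angular=1.0):
    """Map joystick axes in [-1, 1] to a velocity command.

    Pushing forward (y_axis > 0) drives forward. Pushing right (x_axis > 0)
    turns clockwise, which is a negative angular.z in the ROS convention.
    """
    x_axis = max(-1.0, min(1.0, x_axis))
    y_axis = max(-1.0, min(1.0, y_axis))
    return make_twist(y_axis * max_linear, -x_axis * max_angular)


def publish_message(topic, msg):
    """Wrap a message in a rosbridge 'publish' operation and serialize it."""
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


def emergency_stop_message(topic="/cmd_vel"):
    """An emergency stop is simply a zero-velocity command."""
    return publish_message(topic, make_twist(0.0, 0.0))


forward_left = publish_message("/cmd_vel", twist_from_joystick(-0.5, 1.0))
stop = emergency_stop_message()

# Decoded copies, to inspect what would go over the WebSocket.
forward_left_decoded = json.loads(forward_left)
stop_decoded = json.loads(stop)
```

In a browser, a client library would normally handle this framing, and the application code would only supply the topic name and message fields.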

🗝 Keywords: ROS, Web-Based Navigation, ReactJS, Teleoperation, Robotics, ROSBridge, Human-Robot Interaction.

Completed - June 2024

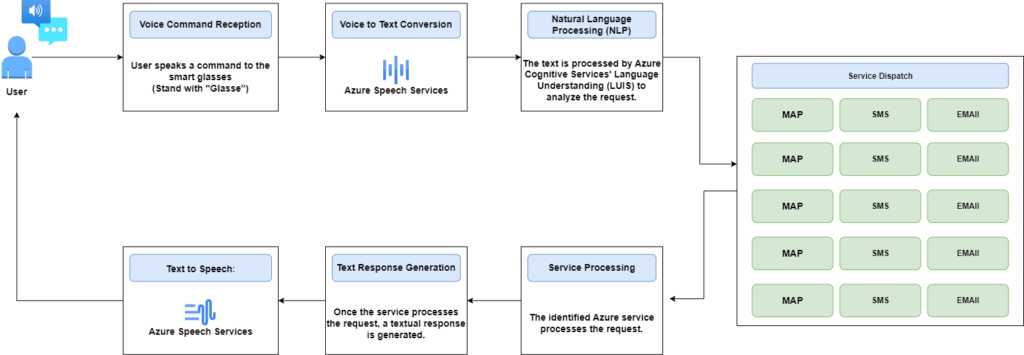

Visual Impair - AI-Powered Smart Glasses

Description

This project aimed to develop AI-powered smart glasses to assist visually impaired individuals in navigating their surroundings more effectively. The initiative secured a spot in the Microsoft for Startups program, receiving $150,000 in credits to access cutting-edge AI models and industry mentorship. These resources facilitated the development of advanced features, including real-time object recognition and speech-based interaction.

The backend of the application was built using FastAPI and deployed on Heroku. Key AI technologies integrated into the system include:

- OpenAI Whisper for speech recognition.

- GPT-3.5 for natural language processing.

- Google Cloud Vision API for image analysis.

- Google Text-to-Speech for speech synthesis.
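One plausible way to chain these four services is a simple pipeline: transcribe the spoken question, describe the camera image, generate a reply, and synthesize it as audio. The actual request flow of the application is not described here, so the stage order, class name, and stub functions below are assumptions. The stubs stand in for the real services so that the sketch runs without network access or API keys.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AssistantPipeline:
    """Chains four stages; each stage is an injected callable."""
    transcribe: Callable[[bytes], str]       # speech audio -> question text
    describe_image: Callable[[bytes], str]   # camera image -> scene description
    answer: Callable[[str, str], str]        # (question, scene) -> reply text
    synthesize: Callable[[str], bytes]       # reply text -> speech audio

    def handle(self, audio: bytes, image: bytes) -> bytes:
        question = self.transcribe(audio)
        scene = self.describe_image(image)
        reply = self.answer(question, scene)
        return self.synthesize(reply)


# Stub stages: placeholders for speech recognition, image analysis,
# language generation, and speech synthesis.
pipeline = AssistantPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    describe_image=lambda image: "a door, a chair",
    answer=lambda question, scene: f"You asked: {question} I can see {scene}.",
    synthesize=lambda text: text.encode("utf-8"),
)
result = pipeline.handle(b"What is in front of me?", b"<jpeg bytes>")
```

Injecting the stages as callables keeps the orchestration testable and lets any one service be swapped without touching the others. A FastAPI endpoint could call `handle` with the uploaded audio and image.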

The project was completed in collaboration with Jacques Asinyo and Kokou SEKPONA.

🔗 Website: OneVision+

🔗 Collaborators:

- Jacques Asinyo: LinkedIn Profile

- Kokou SEKPONA: GitHub Profile

🗝 Keywords: Assistive Technology, AI for Accessibility, Smart Glasses, Speech Recognition, Computer Vision, Natural Language Processing.

Completed - November 2024

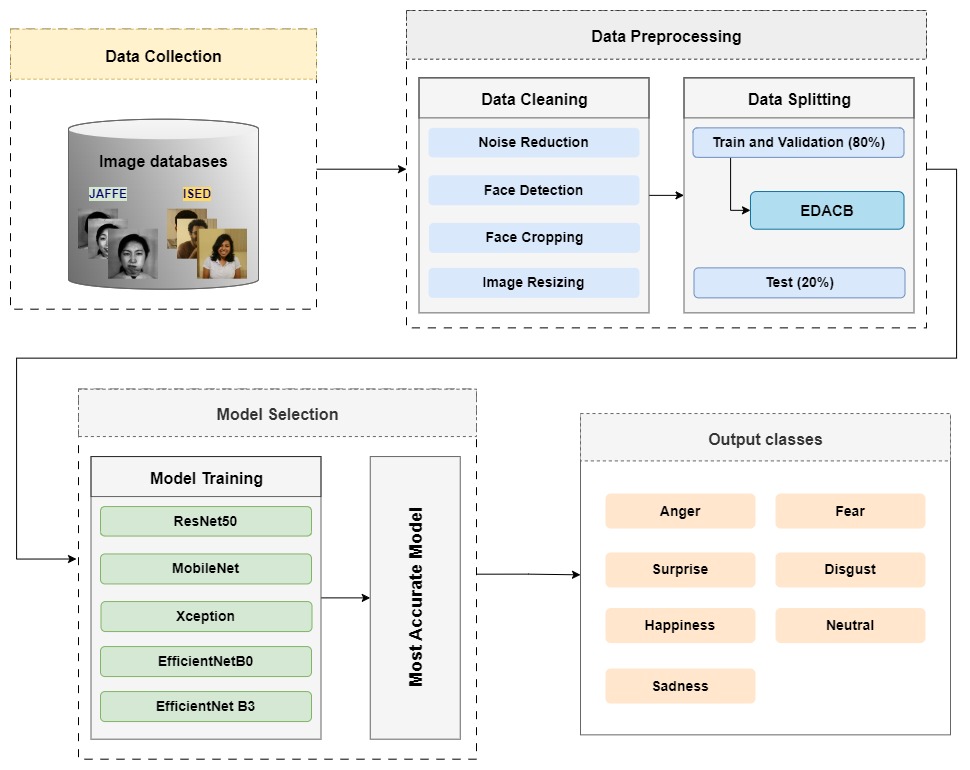

Face Emotion Detection

Description

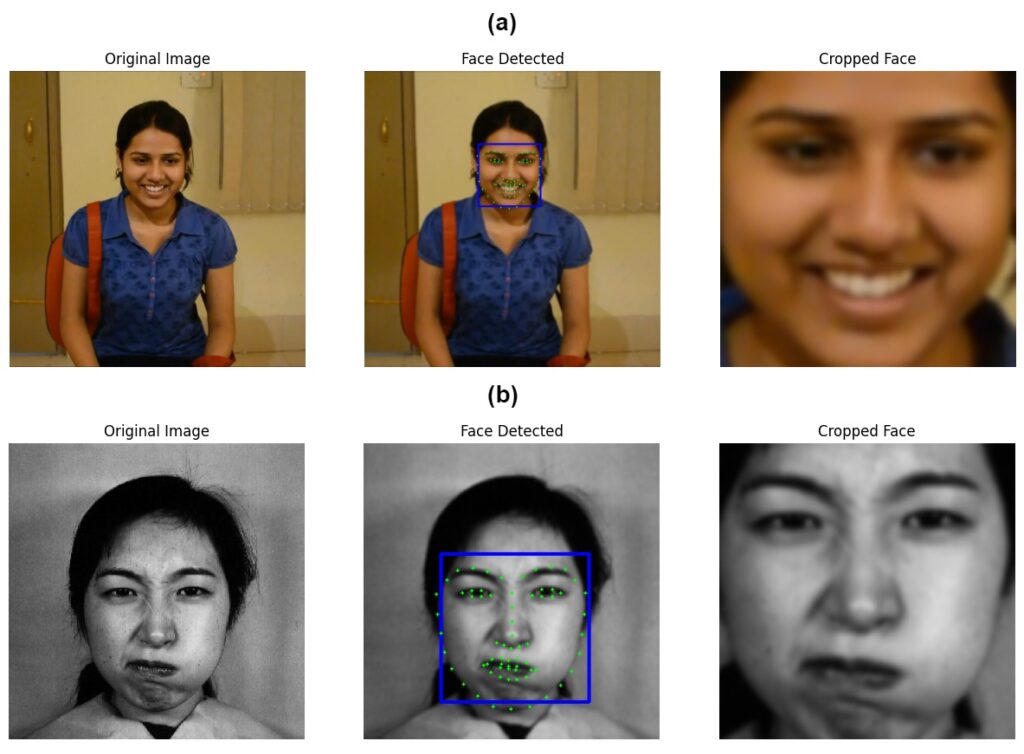

Facial Expression Recognition (FER) plays a vital role in enhancing human-computer interactions by accurately interpreting non-verbal expressions. This project tackles key challenges in FER, including cultural, individual, and contextual variations in facial expressions.

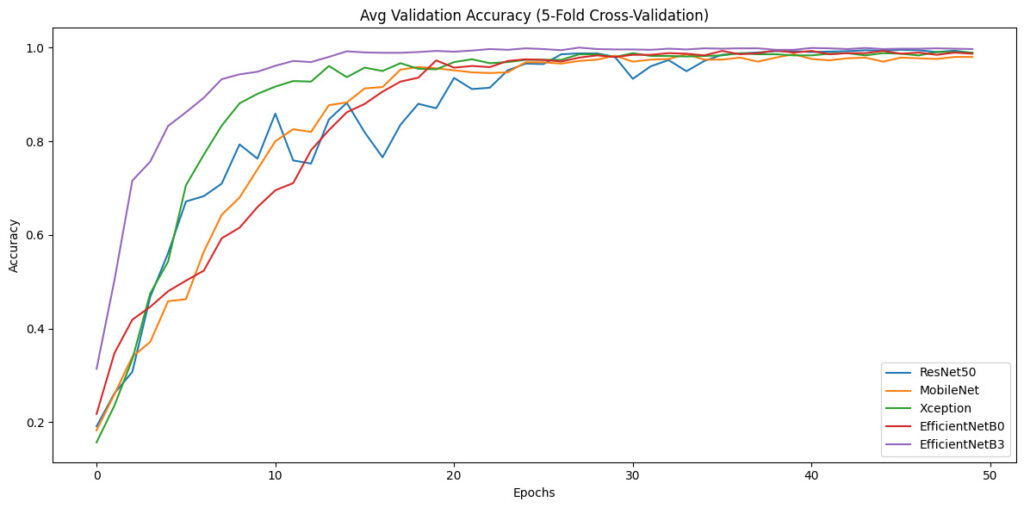

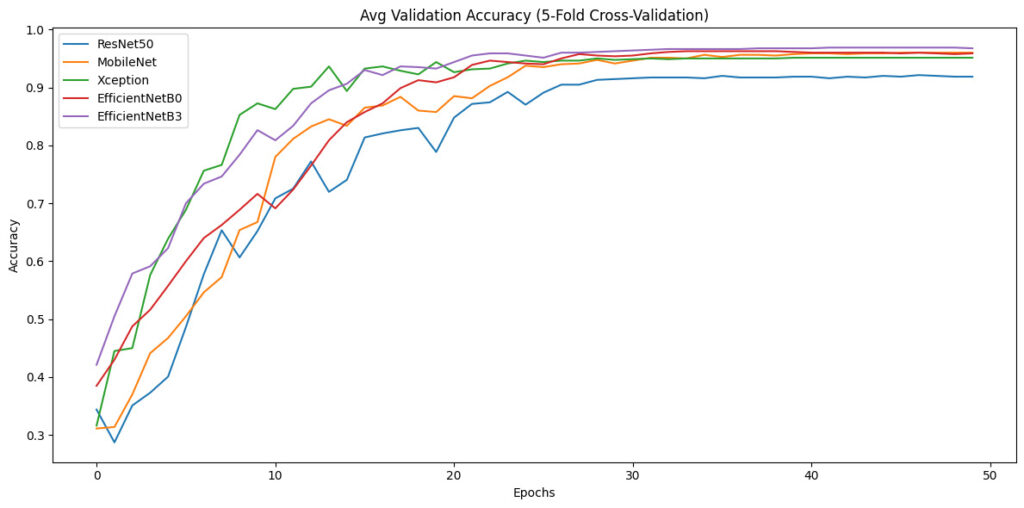

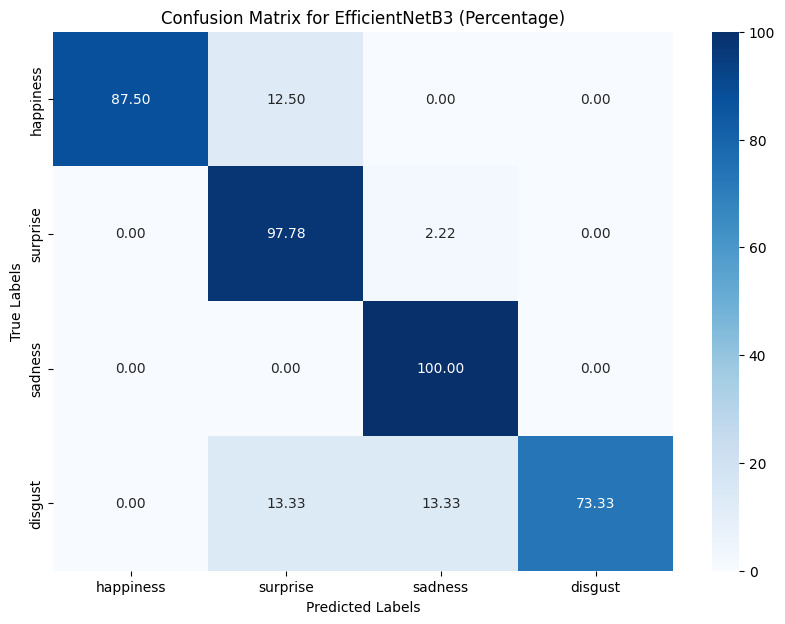

A deep learning approach was implemented using five pre-trained CNN models, optimized for FER tasks. To improve dataset quality and mitigate class imbalances, an Enhanced Data Augmentation and Class Balancing technique was developed. The models were evaluated on two distinct datasets:

- JAFFE (Japanese Female Facial Expression) – controlled facial expressions.

- ISED (Indian Spontaneous Expression Database) – spontaneous expressions.

Among the tested models, EfficientNetB3 achieved the highest accuracy:

- 98.44% on JAFFE

- 91.86% on ISED

Comparative analysis with existing research showed that this approach achieves higher accuracy than previously reported results, making it a robust and effective solution for real-world FER applications.

🗝 Keywords: Facial Expression Recognition, Deep Learning, EfficientNet, Data Augmentation, JAFFE Dataset, ISED Dataset.

Completed - June 2021

Mobile App-Based TFE (Bachelor’s Thesis Project)

Description

🏆 First Prize Winner – Intelligent Robot Design for Object Detection and Measurement (June 30, 2021, Lokossa, Benin)

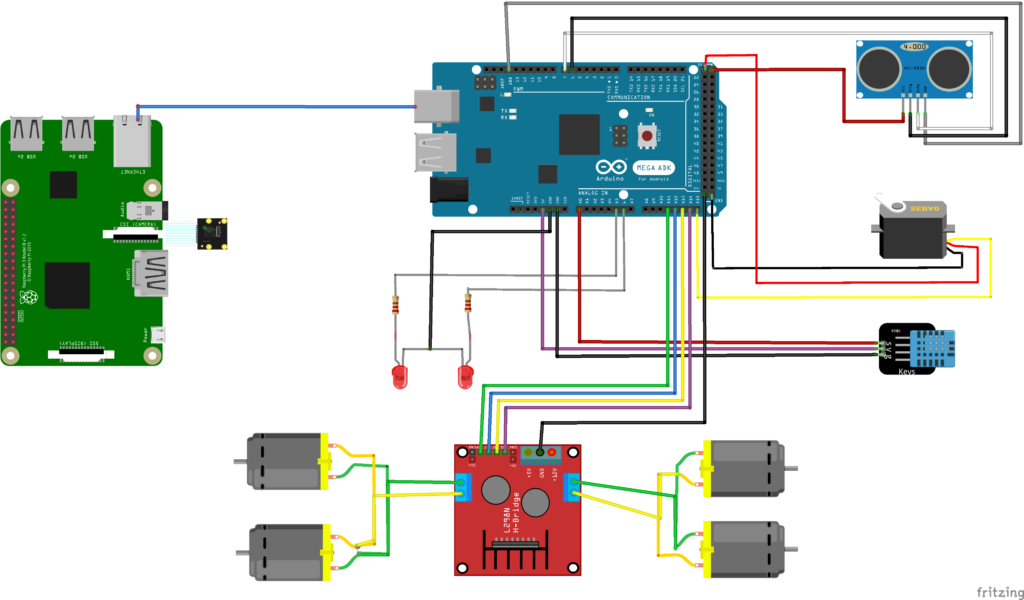

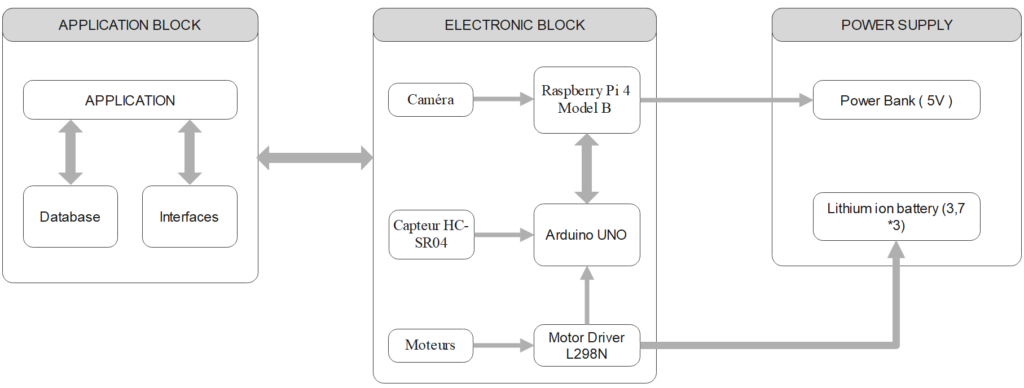

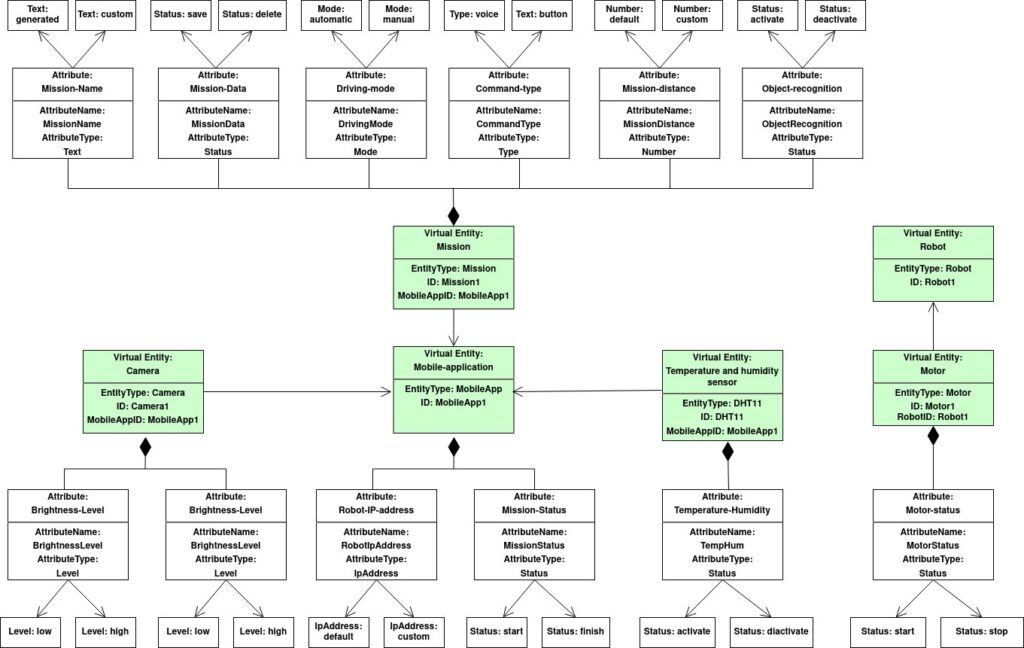

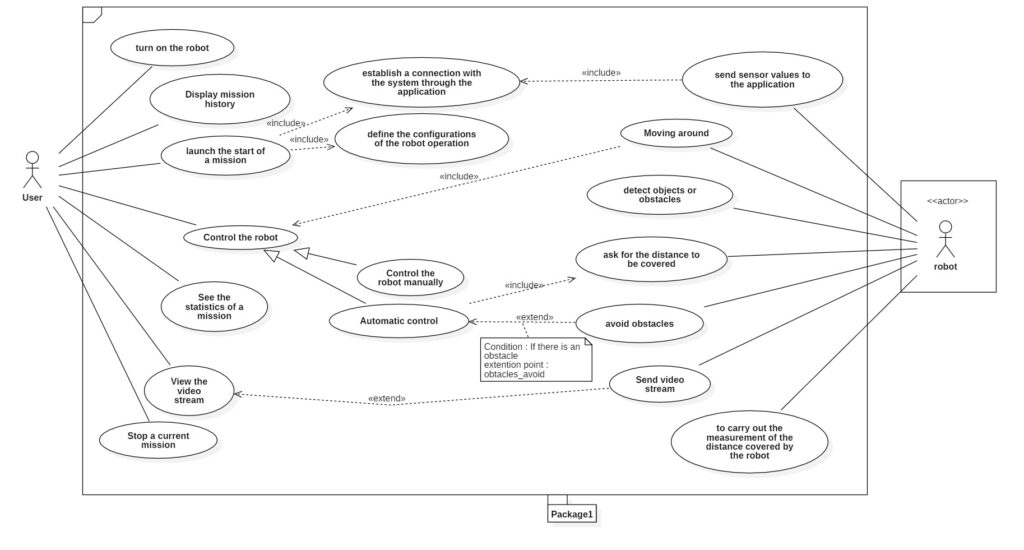

This project aimed to design and develop an intelligent robot, controlled via a mobile application, for real-time object detection, path measurement, and environmental data collection (temperature & humidity). The initiative addresses the high costs of robotic solutions in developing countries, proposing an affordable alternative for exploration missions, military applications, and surveying.

The system was developed using:

- OpenCV for object detection and recognition.

- YOLO (You Only Look Once) for real-time object detection.

- Raspberry Pi for embedded processing.

- Wi-Fi-based communication between the robot and the mobile app.

- UML modeling for system design.

The robot can autonomously avoid obstacles, measure distances, and transmit real-time video feeds to the mobile app. Future developments include expanding the prototype into a full-scale autonomous exploration robot.

🗝 Keywords: Exploration Robotics, Object Detection, YOLO, Embedded Systems, Mobile Robotics, Environmental Sensing.

Completed - March 2022

Ag4All – Agricultural Management Platform

Description

As part of a 9-month professional internship at ART CREATIVITY (Abomey-Calavi, Benin), I contributed to the development of Ag4All, a mobile application for agricultural management. This platform enhances connectivity between farmers, input suppliers, agricultural service providers, and aggregators.

My key contributions included:

- Subscription Management – Implementing features for user subscription handling.

- Marketplace Development – Enabling seamless transactions between agricultural stakeholders.

- Crop Management System – Providing tools for farmers to monitor and manage their crops efficiently.

🔗 Download the app: Ag4All on Google Play Store

🗝 Keywords: Agriculture Technology, Mobile App Development, Marketplace, Precision Farming, Smart Agriculture.